September 19, 2023: Microsoft recently suffered a significant data exposure, adding to growing concerns about the security of artificial intelligence (AI) technology.

Cloud security firm Wiz revealed that Microsoft accidentally exposed 38 terabytes (TB) of internal data. The data remained exposed from July 20, 2020, to June 24, 2023.

The Details of the Data Leak

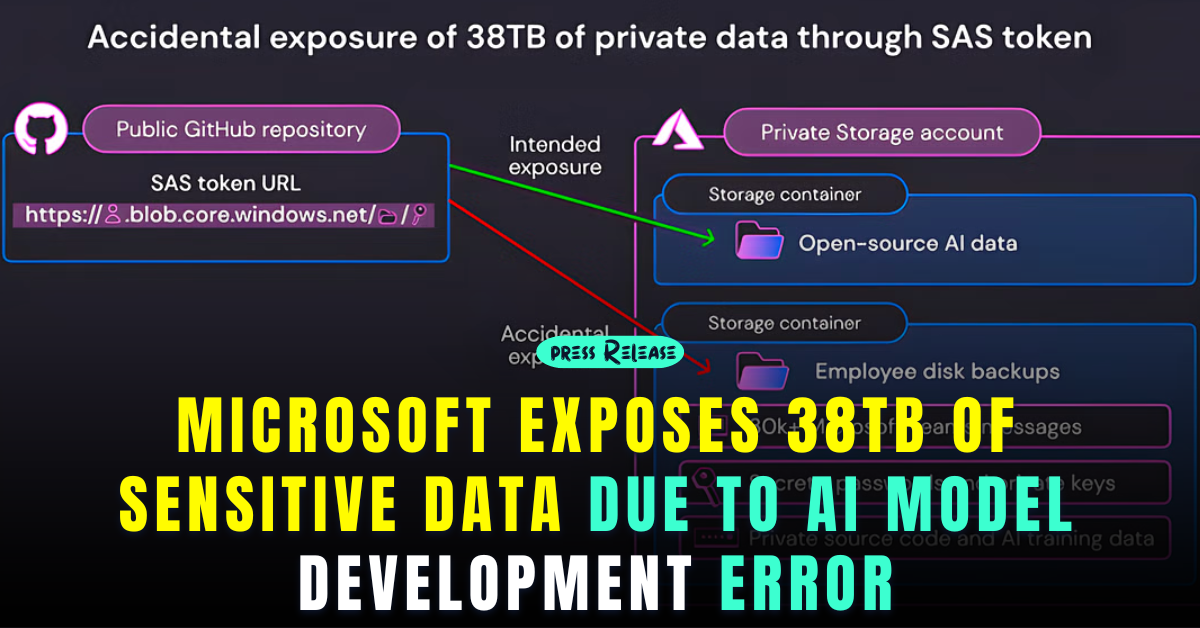

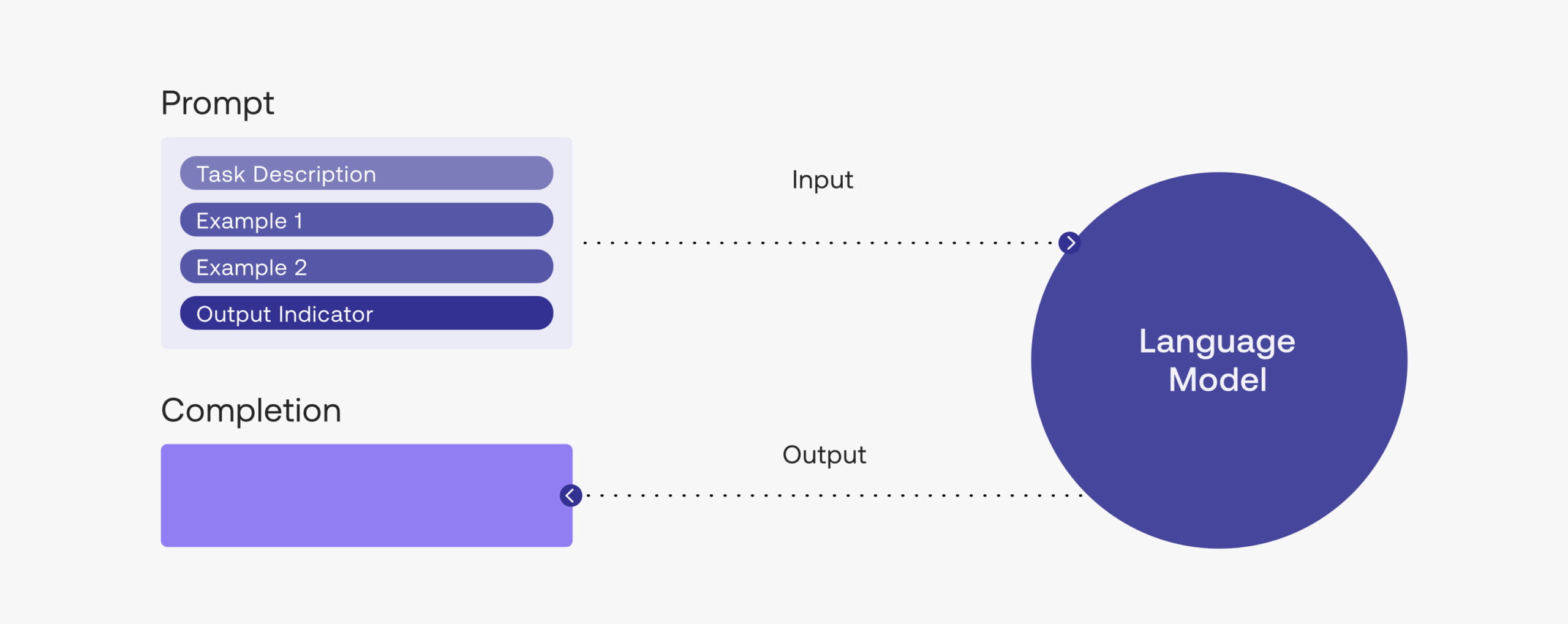

Wiz discovered that the exposure came from a misconfiguration on Azure, Microsoft’s cloud platform, where a data-sharing feature had been used incorrectly. The data sat in Azure storage and was reachable through a link published in a public GitHub repository maintained by Microsoft’s AI research team.

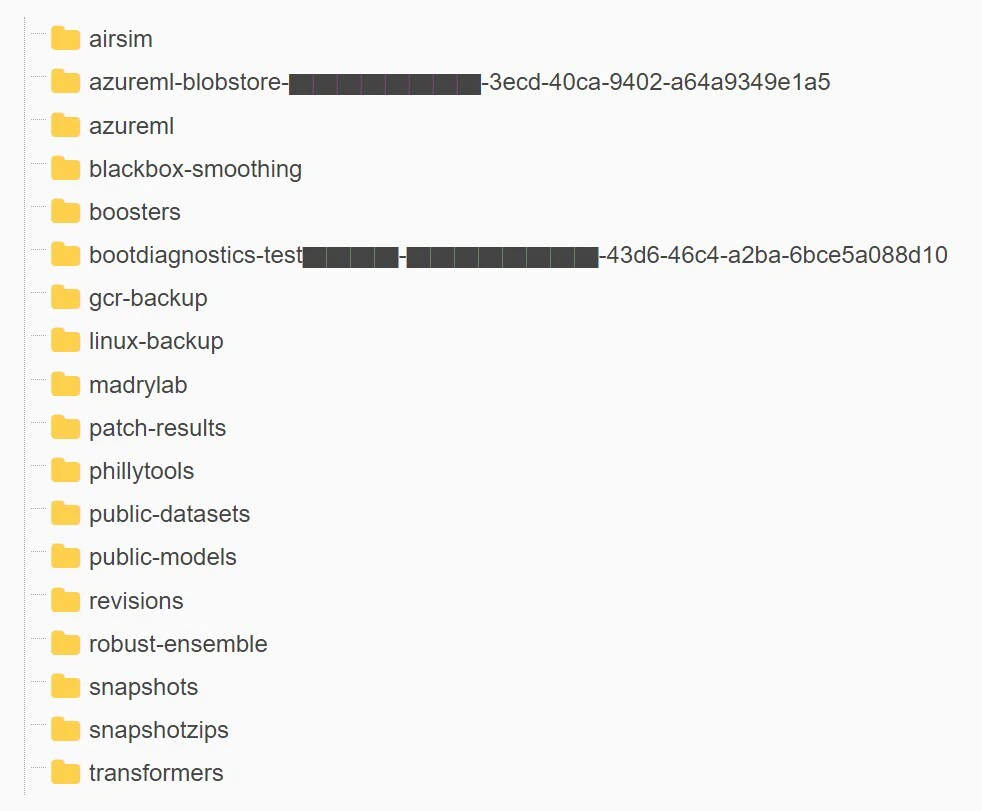

The repository, named “robust-models-transfer,” offered open-source code and AI models that anyone could view. However, a storage link shared in it also exposed data that should never have been public, including backups of employee workstations, secret keys, passwords, and a large number of internal Microsoft Teams messages.

The Core Issue: Misuse of SAS Tokens

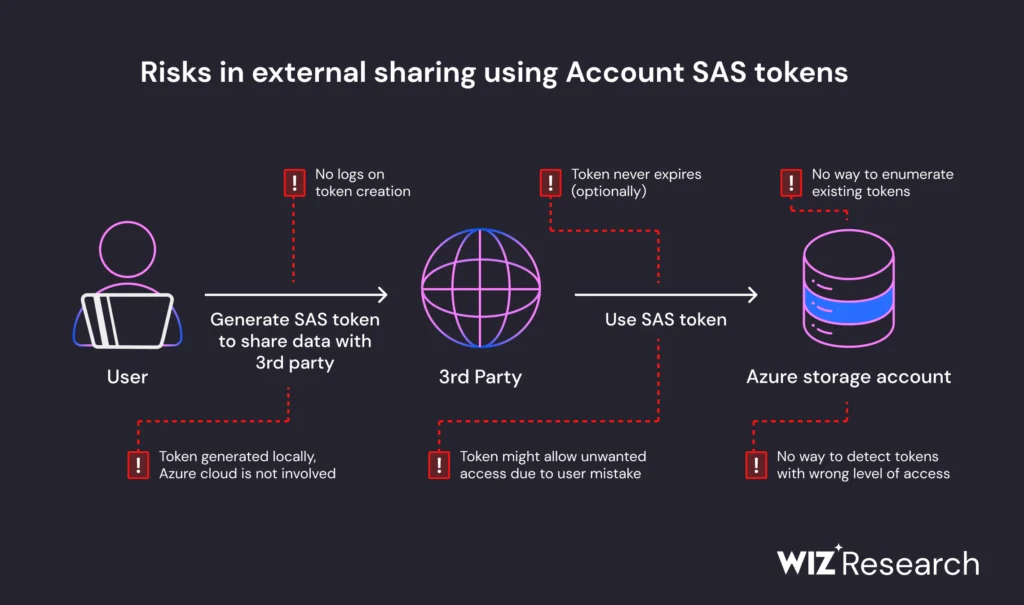

Microsoft used Shared Access Signature (SAS) tokens, which are signed URLs that grant access to Azure Storage data, to share files. The token in this case was configured too permissively, which made the data public by accident. One SAS token was valid from July 2020 to October 2021.

Another was set to remain valid until 2051. SAS tokens can be risky because they can stay valid for a very long time and are hard to track and revoke once issued.
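To make the lifetime risk concrete, here is a minimal Python sketch that inspects a SAS URL and warns about a long validity window or permissions beyond read access. It relies on the standard SAS query parameters `st` (start), `se` (expiry), and `sp` (permissions). The 7-day threshold, the function names, and the example URL are assumptions made for illustration, not Microsoft's or Wiz's tooling.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlsplit, parse_qs

# Hypothetical policy for this sketch: flag anything valid for more than 7 days.
MAX_LIFETIME = timedelta(days=7)


def _parse_time(value):
    """Parse a few UTC timestamp formats commonly seen in SAS tokens."""
    for fmt in ("%Y-%m-%dT%H:%M:%SZ", "%Y-%m-%dT%H:%MZ", "%Y-%m-%d"):
        try:
            return datetime.strptime(value, fmt).replace(tzinfo=timezone.utc)
        except ValueError:
            continue
    raise ValueError(f"unrecognized SAS timestamp: {value!r}")


def audit_sas_url(url, now=None):
    """Return a list of human-readable warnings for one SAS URL."""
    now = now or datetime.now(timezone.utc)
    params = parse_qs(urlsplit(url).query)

    expiry_raw = params.get("se", [None])[0]
    if expiry_raw is None:
        return ["no 'se' (expiry) parameter found"]
    expiry = _parse_time(expiry_raw)

    # If no start time is given, measure the lifetime from now.
    start_raw = params.get("st", [None])[0]
    start = _parse_time(start_raw) if start_raw else now

    warnings = []
    if expiry - start > MAX_LIFETIME:
        warnings.append(f"long lifetime: {(expiry - start).days} days")

    # Permission letters: a=add, c=create, w=write, d=delete.
    permissions = params.get("sp", [""])[0]
    risky = sorted(set(permissions) & set("acwd"))
    if risky:
        warnings.append("grants more than read access: " + "".join(risky))
    return warnings


if __name__ == "__main__":
    # Made-up URL for illustration; the account name and signature are placeholders.
    example = (
        "https://example.blob.core.windows.net/models"
        "?sv=2020-08-04&st=2021-10-06T00:00:00Z"
        "&se=2051-10-06T00:00:00Z&sp=racwdl&sig=REDACTED"
    )
    for warning in audit_sas_url(example):
        print(warning)
```

A check like this only covers tokens you already know about. Because SAS tokens are generated on the client side, finding every token in circulation is the harder problem.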

How Microsoft is Addressing the Problem

Once Wiz reported the issue, Microsoft acted quickly. It revoked the unsafe token and blocked outside access to the exposed data.

Microsoft said that no customer data was exposed and that customers do not need to take any action.

The company also improved its detection systems so that risky SAS tokens are found more quickly.
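As a rough illustration of what finding SAS tokens can involve, the Python sketch below scans text, such as a README, for URLs that carry both a `sig` (signature) and an `se` (expiry) query parameter. This is a simplified heuristic written for this article, not a description of how Microsoft's or GitHub's scanners work. The function name `find_sas_urls` and the sample text are hypothetical.

```python
import re
from urllib.parse import urlsplit, parse_qs

# Rough pattern: any http(s) URL that has a query string.
URL_RE = re.compile(r"https?://[^\s\"'<>]+\?[^\s\"'<>]+")


def find_sas_urls(text):
    """Yield (line_number, url) for URLs that look like SAS links."""
    for line_no, line in enumerate(text.splitlines(), start=1):
        for match in URL_RE.finditer(line):
            params = parse_qs(urlsplit(match.group()).query)
            # A SAS URL carries a signature and an expiry time.
            if "sig" in params and "se" in params:
                yield line_no, match.group()


if __name__ == "__main__":
    # Made-up sample text; the storage account and signature are placeholders.
    sample = (
        "Download the model:\n"
        "https://example.blob.core.windows.net/m"
        "?sv=2020-08-04&se=2051-10-06T00:00:00Z&sp=r&sig=abc\n"
        "See https://example.com/docs?page=2 for details."
    )
    for line_no, url in find_sas_urls(sample):
        print(f"line {line_no}: {url}")
```

A production scanner would also need to cover commit history and reduce false positives, which this sketch does not attempt.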

The Bigger Picture: AI Security Needs Strengthening

This incident shows that AI development demands greater care with data. The technology is advancing quickly, and securing it is difficult. Ami Luttwak, CTO of Wiz, said that all companies need to be very careful.

They should take decisive steps to keep incidents like this from happening again. Data is critical and must be protected, especially when building new AI technology.

Microsoft said it learned from Wiz’s findings and has improved GitHub’s secret scanning so that it is better at spotting unsafe SAS tokens.

The company confirmed that it is taking steps to ensure such errors do not happen again, emphasizing the importance of data security in an era of rapid AI advancement.